AI chatbots and agents provide interesting and useful opportunities for data professionals to become more effective and to make certain tasks more convenient. In the right scenarios, these tools can augment individuals and teams to do more and overcome technical barriers. However, you must invest time and effort to educate yourself on, set up, and get value from these tools.

In a previous article, we wrote about how model context protocol (MCP) servers can enable interesting scenarios for both conversational BI and BI development. However, to use AI effectively, it is best that you do not focus on specific models, tools, or features. This is especially true now, since they are evolving so rapidly. Instead, it makes more sense to think about the various building blocks that you combine to get the best results. None of these building blocks is sufficient alone, and all of them are required to some extent when you use AI for BI development.

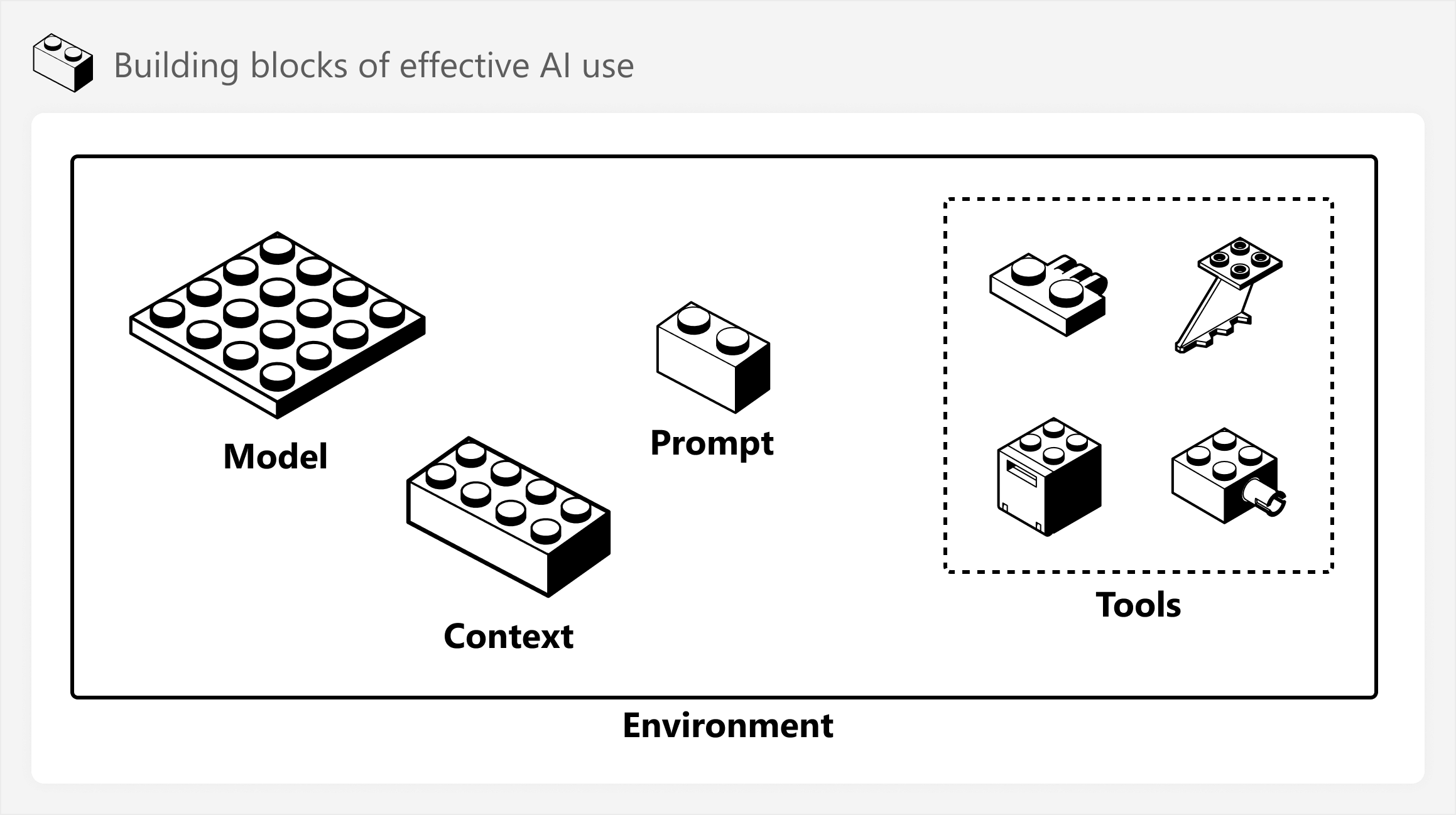

Consider this by using the following analogy that shows these building blocks as Lego bricks.

In general, the building blocks that you combine in your AI workflow include:

- Large Language Model (LLM; model): Which LLM you will use. Different models have different capabilities and require different optimization, depending on your scenario.

- Context: What information you will give the model to improve its results. Giving sufficient context (but not too much) is pivotal as you scale your use of AI beyond ephemeral prompts in chatbots.

- Prompt: The request or instructions you provide as input to an agent. Like context, writing good prompts is important to get good outputs, particularly when you use tools.

- Tools: How the agent will interact with its surroundings. Tools can be built-in or custom, including MCP servers, command-line interface (CLI) tools, and others.

- Environment: The space where the agent operates and acts; what it can and cannot see or touch. Managing your environment is pivotal to ensure safety and also limit context.
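To make the idea of combining building blocks more concrete, here is a minimal Python sketch that treats them as fields of a single object. The class name `AgentWorkflow`, its fields, and the example values are hypothetical and only serve as an illustration; this is not an API of any particular tool.

```python
from dataclasses import dataclass, field


@dataclass
class AgentWorkflow:
    """Hypothetical container for the five building blocks of an AI workflow."""

    model: str = ""                                    # which LLM you use
    context: list[str] = field(default_factory=list)   # instructions, metadata, documents
    prompt: str = ""                                   # the request for the current task
    tools: list[str] = field(default_factory=list)     # built-in tools, MCP servers, CLI tools
    environment: dict[str, bool] = field(default_factory=dict)  # what the agent may touch

    def missing_blocks(self) -> list[str]:
        """Return the names of the building blocks that are still empty."""
        blocks = {
            "model": self.model,
            "context": self.context,
            "prompt": self.prompt,
            "tools": self.tools,
            "environment": self.environment,
        }
        return [name for name, value in blocks.items() if not value]


# A chatbot-style setup: only a model and a prompt have been chosen so far.
workflow = AgentWorkflow(model="some-llm", prompt="Write a DAX measure for total sales")
print(workflow.missing_blocks())
```

In this sketch, a workflow with only a model and a prompt reports that context, tools, and environment still need attention, which mirrors the point that no single block is sufficient alone.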

Elaborating on each of these building blocks takes significant time, effort, and examples. So, we have written a whitepaper about it for SQLBI+, including detailed explanations with four different scenarios. We introduce these scenarios below with one diagram and example, each. However, in the whitepaper, you can find over 50 different diagrams, examples, and sample files to get you started. We also have a 90-minute video walkthrough of each scenario, with examples like the following:

- Using MCP servers to interact with Fabric, run semantic-link-labs code, view mock-ups in Figma, and view published reports in the browser.

- Letting agents command and execute the Fabric CLI to take actions in Fabric.

- Using agents to build a Power BI report end-to-end.

- Delegating tasks to an asynchronous agent for development.

This article is just a high-level overview of the capabilities and considerations in each scenario. If you want a more detailed walkthrough, check out the full content (samples, whitepaper, and full video) on SQLBI+. SQLBI+ is a paid subscription service that allows us to regularly deliver more detailed, higher-proficiency content that focuses more deeply on important topics.

Scenarios

We currently see four different scenarios in which you can leverage AI and agents for BI development. We provide a high-level overview of each below. Note that these scenarios do not propose best practices or patterns to repeat. Rather, they report observations from the market and from our own experience. These scenarios are also not mutually exclusive, and they generally increase in maturity and process complexity.

Scenario one: Chatbot tools

This is the simplest scenario, where a user leverages an LLM chatbot to assist in answering questions and generating code. The chatbot has access to built-in tools and basic context provided by the user in their prompt, conversation history, or as part of a tailored experience like Copilot in Power BI. In this scenario, a user relies mainly on their prompt and out-of-the-box experiences to generate useful outputs, which they typically either download or copy for use in other tools.

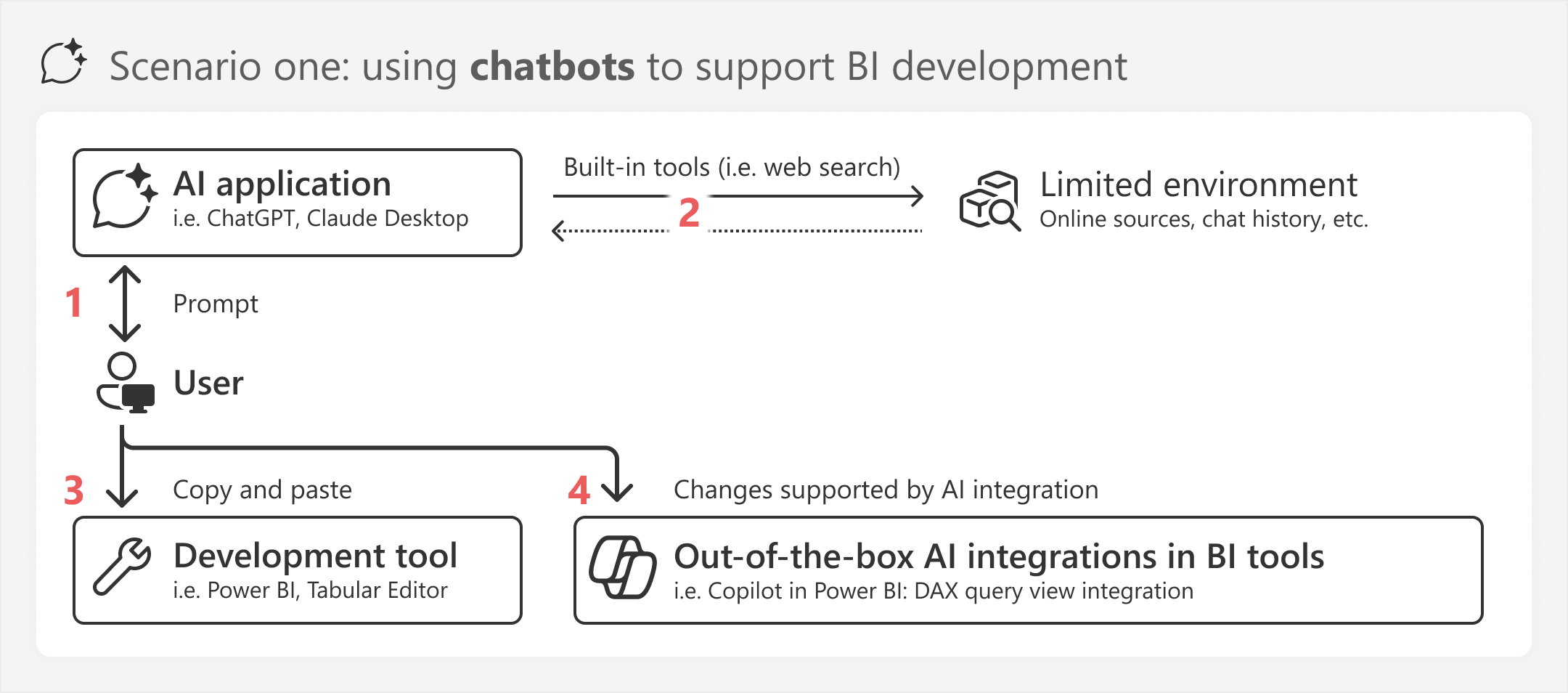

The diagram for this scenario is as follows.

The diagram depicts the following processes:

- A user engages with a chatbot via an AI application like ChatGPT, Claude Desktop, GitHub Copilot in Ask mode, and others. In the prompt, users provide context, like files, images, and other information. Most chatbots use models that have reasoning capabilities and multimodality. Users can provide basic user instructions.

- The chatbot has built-in tools for limited engagement with its environment, including web search for retrieving up-to-date information from online sources, or some form of memory, like searching over the chat history.

- The user typically provides code or context to the LLM, and then receives code back that they can paste into their development tool, like Power BI or Tabular Editor. The LLM might also provide outputs like generated images that the user can upload (such as canvas backgrounds or icons).

- The user might also leverage out-of-the-box AI integrations in their BI tools, like Copilot in Power BI. An example is using Copilot to suggest DAX measures in the DAX query view, or suggesting a report page.

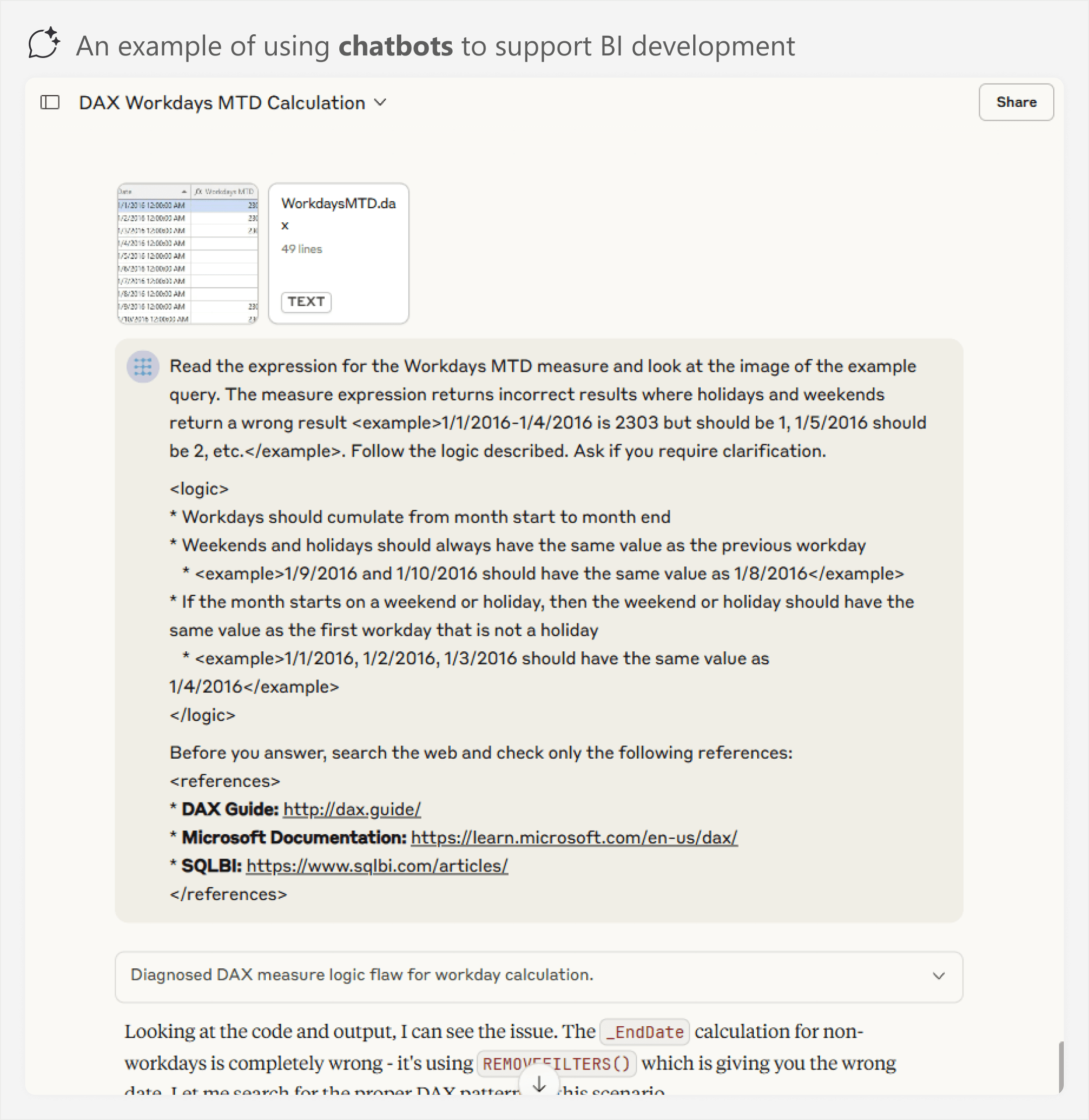

Here is an example of what this scenario looks like in Claude Desktop.

The previous example is a bit verbose, but still quite simple. However, you can see how the user relies mostly on their prompt and some built-in tools (like web search) to get help. In this case, the user might copy the DAX code to their model to test it.

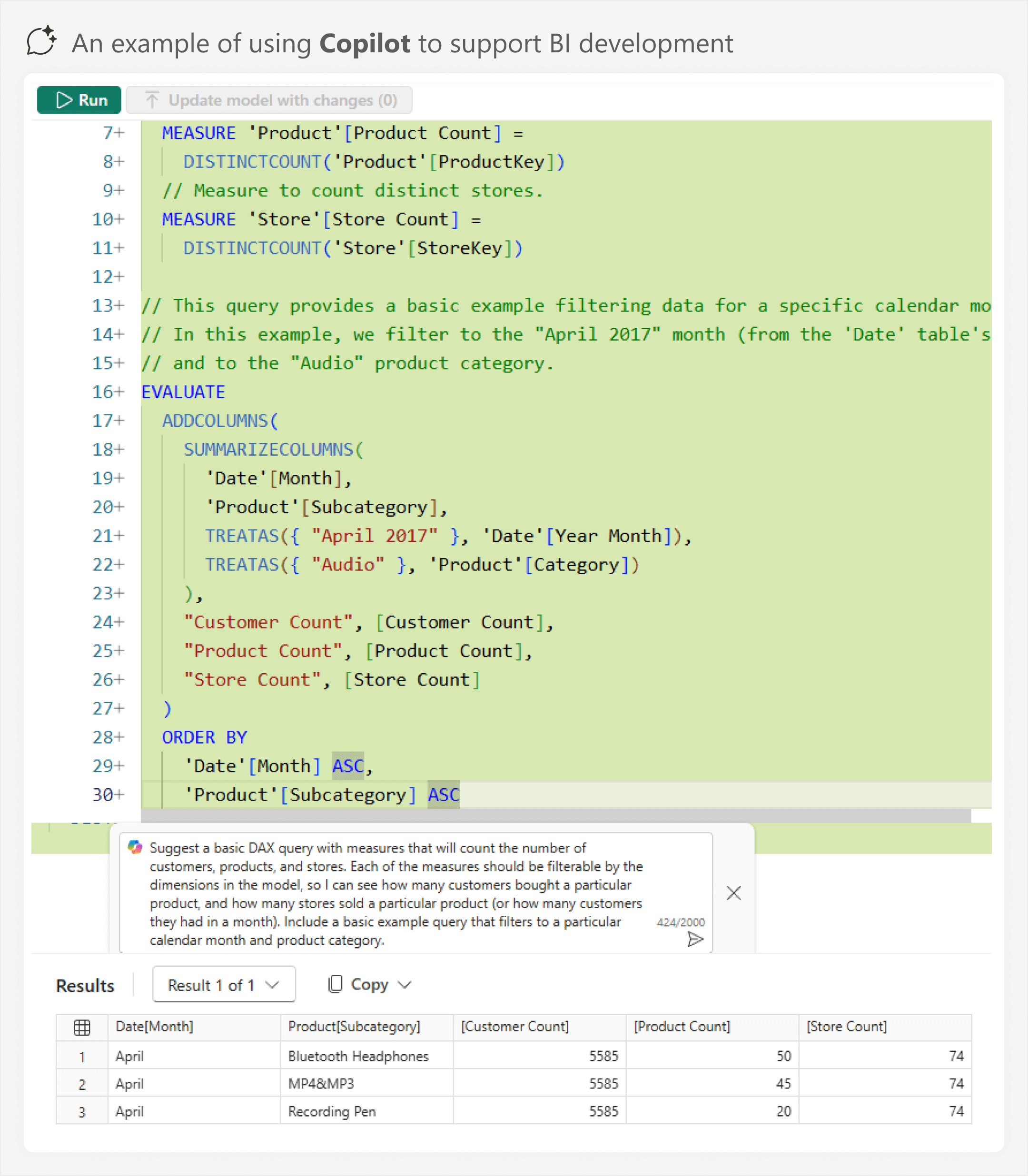

In this scenario, you can also use out-of-the-box AI integrations in BI tools, like Copilot in Power BI. An example is the Copilot experience in the DAX query view, which might support you in semantic model or report development by helping you to generate DAX measures and queries.

The previous example produces incorrect results, but it might be a good starting point for beginners. The correct result could involve using the key columns in the Sales fact table and not in the dimension tables (which cannot filter each other). There are various valid use-cases and benefits for using Copilot in this experiment.

Copilot in the DAX query view can automatically read model metadata as context. It also has various pre- and post-processing steps to improve outputs compared to other, generic AI applications; however, you have less visibility and control over Copilot’s capabilities, configuration, and which LLM is used.

Most people are familiar with this scenario. It requires minimal setup and has limited process complexity. However, it also has limited utility and higher risk for wasting time or falling victim to LLM mistakes or fallacies.

Scenario two: Augmented chatbot experiences

In this scenario, users augment the chatbot experience by better leveraging context (such as instructions and remote repositories) and custom tools (such as MCP servers). Tools allow the chatbot to better retrieve context or even take actions in an environment. You can use MCP servers to make changes to or develop a semantic model or report. However, the MCP server tools will be limited in their capabilities, and the experience is not very convenient without a supporting user interface.

Here, a prerequisite is that you use the Power BI Project (PBIP) format so that the model metadata is readable and writable by both humans and AI. It is also recommended that you use remote repositories like GitHub or Azure DevOps Repos for source control, since you can connect them to AI applications.
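To illustrate why a readable metadata format matters, here is a minimal Python sketch that gathers measure names from the metadata files of a project folder, so that you could provide them as context. It assumes the semantic model is saved as TMDL and that each measure is declared on a line of the form `measure Name = ...` or `measure 'Quoted Name' = ...`. The function names and the sample table are hypothetical, and the pattern does not handle quotes escaped inside names.

```python
import re
from pathlib import Path

# Assumed declaration shape:  measure 'Sales Amount' = SUM(Sales[Amount])
MEASURE_PATTERN = re.compile(r"^\s*measure\s+('[^']+'|[^\s=]+)\s*=", re.MULTILINE)


def list_measures(tmdl_text: str) -> list[str]:
    """Return the measure names declared in one TMDL document."""
    names = []
    for match in MEASURE_PATTERN.finditer(tmdl_text):
        names.append(match.group(1).strip("'"))
    return names


def collect_measures(project_dir: str) -> dict[str, list[str]]:
    """Map each .tmdl file under project_dir to the measures it declares."""
    result = {}
    for path in sorted(Path(project_dir).rglob("*.tmdl")):
        measures = list_measures(path.read_text(encoding="utf-8"))
        if measures:
            result[path.name] = measures
    return result


# Hypothetical table definition, used only to demonstrate the parsing.
SAMPLE = """table Sales

	measure 'Sales Amount' = SUM(Sales[Amount])
		formatString: #,0

	measure Margin = [Sales Amount] - [Cost]

	column Amount
		dataType: decimal
"""

print(list_measures(SAMPLE))
```

A binary file format would not allow this kind of simple text processing, which is one reason why the text-based project format is a prerequisite for this scenario.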

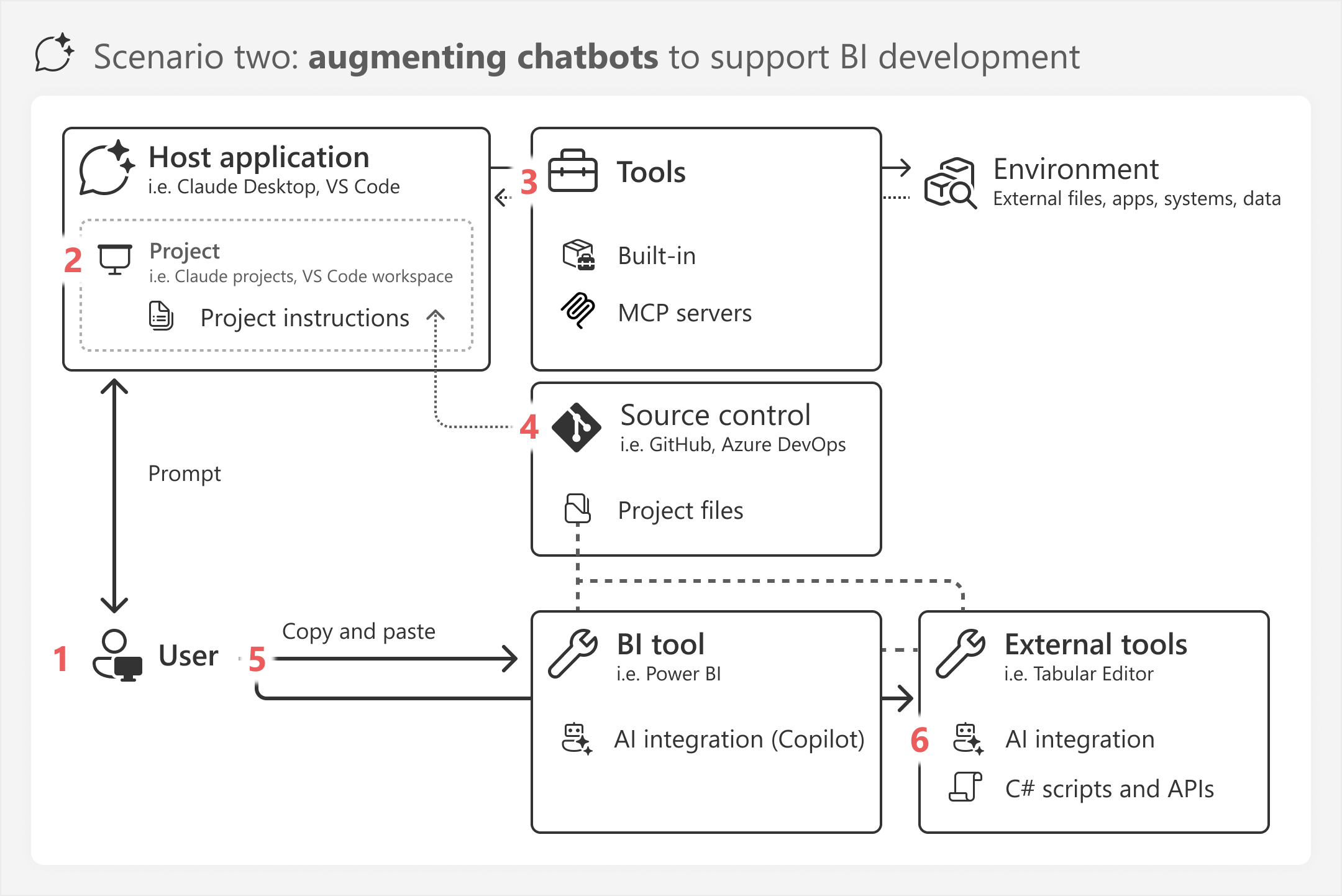

The diagram for this scenario is as follows.

The diagram depicts the following processes:

- The user still uses a chatbot in an AI application, such as Claude Desktop or VS Code, simultaneously with BI tools and external tools, depending on their preference and workflow.

- The user sets up a project or folder in the host application that contains special context and instructions for the chatbot.

- The user leverages a combination of built-in tools and custom MCP servers. These could be MCP servers from official vendors, open-source MCP servers from trusted sources and extensions, or custom local MCP servers the user has built and uses for their own needs.

- The user connects a remote GitHub repository to a Claude project or opens the cloned repository in VS Code. The LLM cannot change these files and can only read them into context.

- Changes to models and reports are made by copying and pasting code into the BI tool or external tool.

- Some external tools enable making modifications directly, such as C# scripts in Tabular Editor which can call and use the APIs for various providers of LLM services and products.

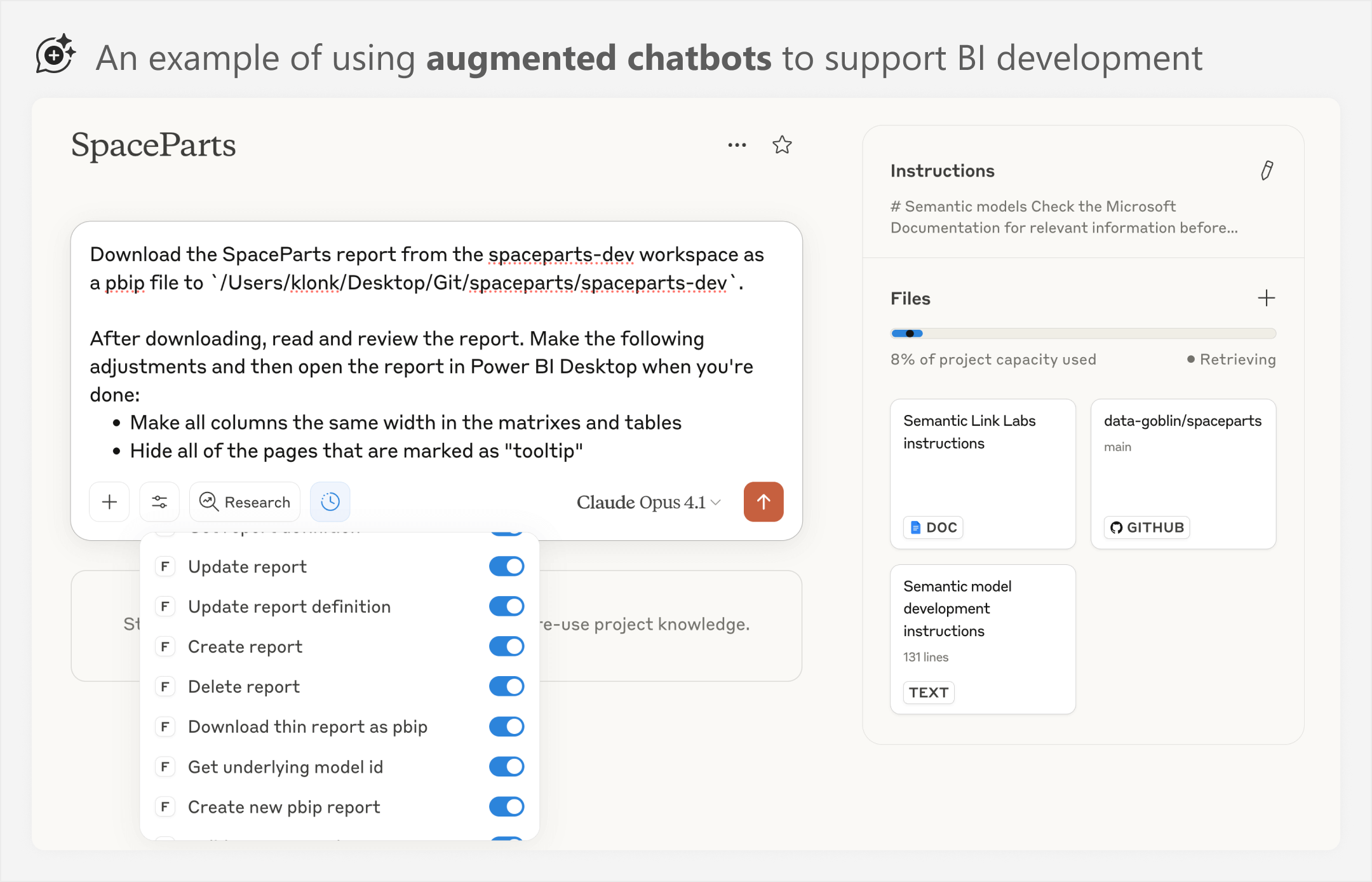

You can see an example below of the augmented chatbot scenario.

The example shows a Claude Desktop project where the user no longer relies on ephemeral prompts alone, but invests more in providing context via instructions and metadata. They also use custom MCP servers to provide context and tools for the model. This example is a static image that shows the different parts, but our previous article and video show several examples of this.

This scenario requires more time and effort to create and curate both context and tools. Here, the user exercises more autonomous intent based on what they want the chatbot to do, and what kinds of outputs they expect. The scenario thus requires additional planning and critical thinking from the user.

Scenario three: Agentic development

This scenario describes the use of agentic tools to help develop and manage semantic models, reports, and other artifacts or processes in your BI platform:

- In simple scenarios, you can use the agent to search and make limited changes to your model or report metadata. An example could be parameterizing Power Query steps or copying conditional formatting from one column to another in a table or matrix. This scenario is an extension of scenario two, where the user needs to provide limited instructions and tools, mainly leveraging the built-in tools to make changes.

- In more advanced scenarios, you can use the agent to facilitate larger development tasks. An example could be building an entire report from a wireframe or mock-up, before a human takes over for polishing and revisions. This advanced scenario requires a certain process maturity and consistency, and significant effort in creating and curating context. Even so, in their current state, agents require substantial supervision and orchestration.

Agents that work on Power BI metadata files (like TMDL, PBIR, and others) have a tendency to make mistakes, not only because of the sparseness of this information in training data, but also because Power BI semantic models and reports are complex.

In this scenario, the user focuses on the use of agentic coding tools either in an integrated development environment (IDE) or on a terminal scoped to a particular project. The user focuses most of their effort on orchestrating agent planning and curating the context with granular instructions, while also iteratively improving and validating agent outputs. The agent can read and write project files, and exhibits autonomous (but supervised) use of built-in tools, MCP servers, and CLI tools to gather context or take actions in its environment. This scenario can be done in combination with scenario two.

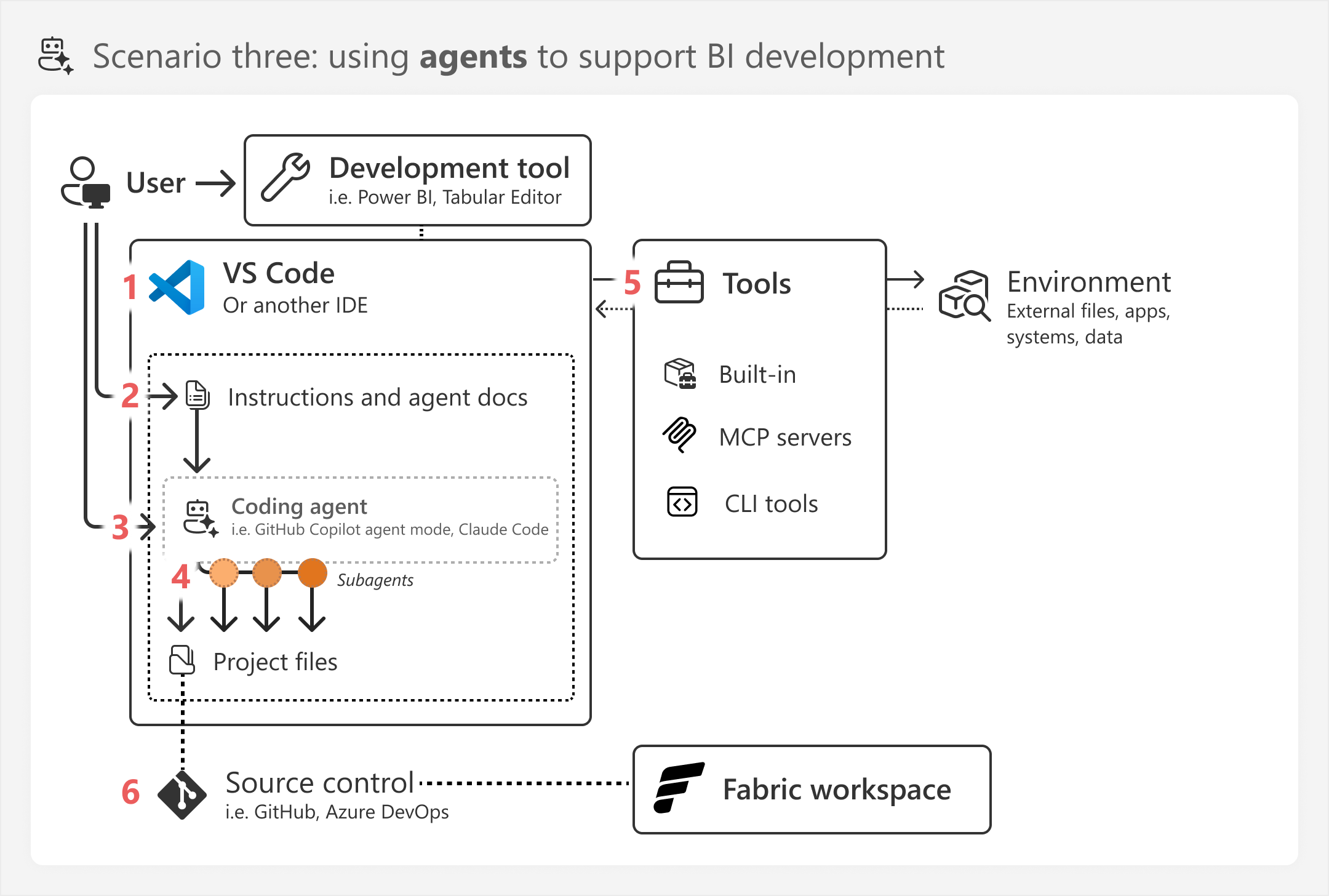

The diagram for this scenario is as follows.

The diagram depicts the following processes:

- The user uses agents inside of an IDE (but they could also use only a terminal for CLI agents).

- Most of their time goes toward creating instructions, design documents, and agent documents to curate a set of rich but specific and well-written context for the agent to perform its task well. The context must accurately reflect the business and technical reality.

- The user prompts the coding agent to help with development tasks. The bulk of report and model development is still performed by the user; it is not realistic to expect that agents will be able to handle the majority of end-to-end development tasks for Power BI any time soon.

- The agents can read and write changes to code directly. If supported, the user can configure specialized subagents that the main orchestrator can use in parallel or in sequence for certain tasks.

- Users configure agents to have access to built-in tools, MCP servers, and also command-line tools. These tools give the agent extensive control over its own context and capabilities in the environment. More context is needed to use CLI tools successfully.

- The user makes use of remote repositories to structure the work and restore previous versions when necessary. They can set up streamlined deployment to a Fabric workspace for testing, by using Git integration, tools, or custom deployment methods.
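Because CLI tools give an agent extensive control, one way to manage the environment is to check each command against an allow-list before it runs. The following Python sketch shows that idea under stated assumptions: the executable name `fab` and the subcommands `ls`, `get`, and `rm` are assumed from the Fabric CLI and should be verified against the version you use, while the function names and the workspace name `Sales.Workspace` are hypothetical.

```python
import shlex
import subprocess

# Assumption: these subcommands only read information and are safe to auto-approve.
ALLOWED_SUBCOMMANDS = {"ls", "get"}


def is_allowed(command: str, executable: str = "fab") -> bool:
    """Return True if the command calls the expected CLI with an allowed subcommand."""
    parts = shlex.split(command)
    return (
        len(parts) >= 2
        and parts[0] == executable
        and parts[1] in ALLOWED_SUBCOMMANDS
    )


def run_guarded(command: str) -> str:
    """Run an allowed command without a shell; anything else needs a human."""
    if not is_allowed(command):
        raise PermissionError(f"Command needs human approval: {command}")
    completed = subprocess.run(
        shlex.split(command), capture_output=True, text=True, check=True
    )
    return completed.stdout


# Only the check is exercised here, so the example runs without the CLI installed.
print(is_allowed("fab ls Sales.Workspace"))   # read-only listing
print(is_allowed("fab rm Sales.Workspace"))   # destructive, so not auto-approved
```

Many agentic tools have their own permission settings for this purpose; the sketch only illustrates the principle of limiting what the agent can touch.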

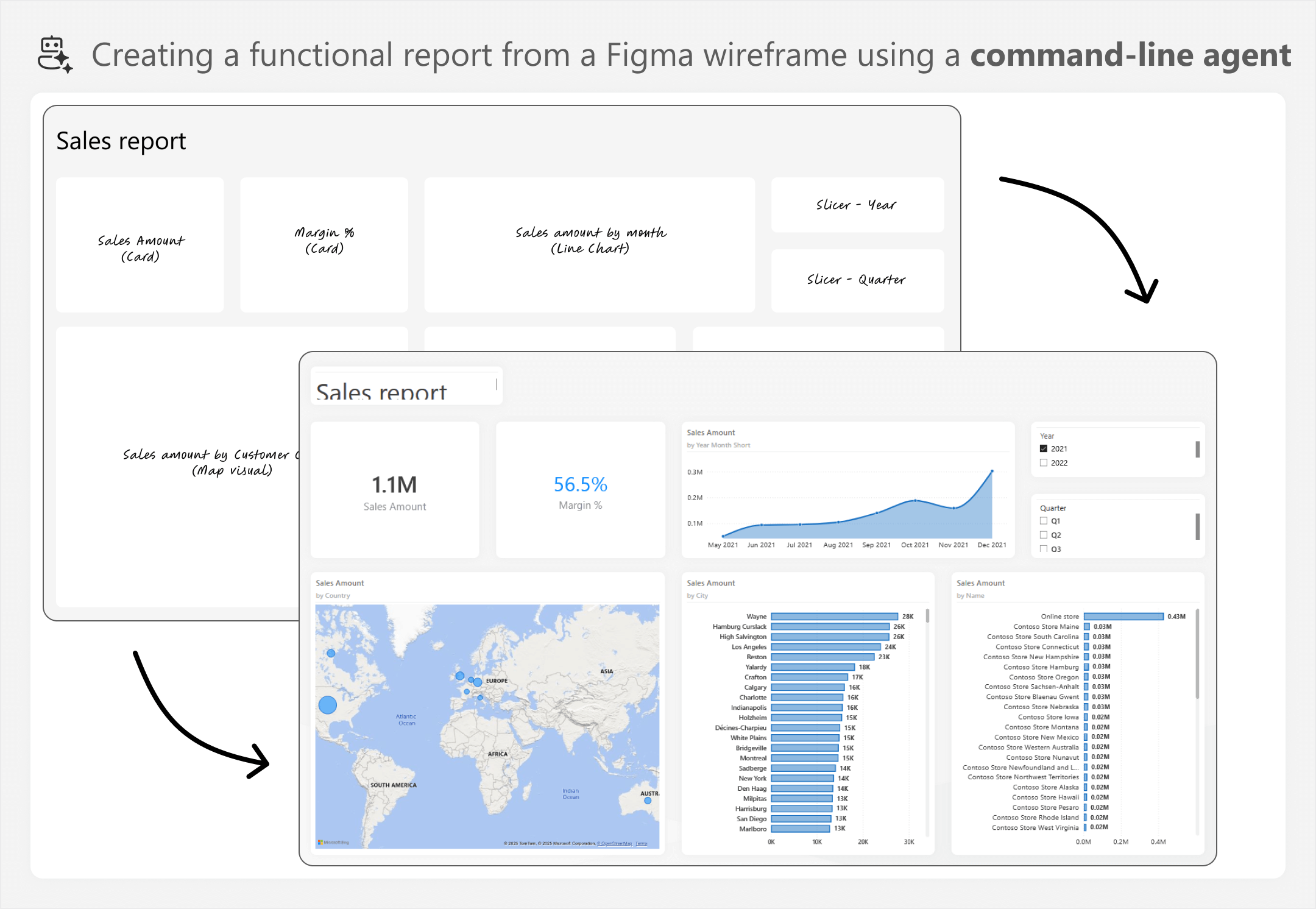

Here is a more sophisticated example, where a main Claude Code agent orchestrates a team of specialized subagents to build a report based upon a wireframe in Figma.

You can see this demonstrated in an animated video. The demo is sped up and cut due to size limits. You can view the full, uncut demonstration and explanation on SQLBI+, as well as the sample files used to make it.

Here is what happens in this short demo:

- A command-line agent (Claude Code) uses an MCP server to read the Figma wireframe.

- The user runs a re-usable prompt to plan reports.

- The agent infers from the design and conversation history what model to use.

- It searches for the model and maps fields to visuals by controlling the Fabric CLI.

- It produces a plan for user review.

- The user reviews and adjusts the plan, then approves it by starting development.

- The agent builds a Power BI Project and delegates to a team of specialized sub-agents the building of the page and visual metadata. Subagents are executed in parallel.

- The orchestrating agent reviews the resulting metadata against the spec and schemas.

- The user approves the development and asks the agent to deploy to a Fabric workspace.

- The agent publishes the report and fixes minor issues.

- The agent controls the user’s browser to view and validate the published, functional report.
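The delegation and review steps in this demo can be sketched in Python as follows. This is only an illustration of the orchestration pattern and not how Claude Code implements subagents. The function `build_visual` is a hypothetical stand-in for a subagent (a real one would call an LLM and write metadata files), and the plan entries are invented for the example.

```python
from concurrent.futures import ThreadPoolExecutor


def build_visual(spec: dict) -> dict:
    """Stand-in for a specialized subagent that builds one visual from its spec."""
    return {"name": spec["name"], "type": spec["type"], "status": "built"}


def review(results: list[dict], plan: list[dict]) -> list[str]:
    """Orchestrator check: list planned visuals that are missing or have the wrong type."""
    built = {result["name"]: result["type"] for result in results}
    return [spec["name"] for spec in plan if built.get(spec["name"]) != spec["type"]]


def orchestrate(plan: list[dict]) -> tuple[list[dict], list[str]]:
    """Run one subagent per planned visual in parallel, then review the results."""
    with ThreadPoolExecutor(max_workers=4) as pool:
        results = list(pool.map(build_visual, plan))  # map keeps the plan order
    return results, review(results, plan)


# Hypothetical plan that the user approved before development started.
plan = [
    {"name": "Sales by Month", "type": "lineChart"},
    {"name": "Total Sales", "type": "card"},
]
results, problems = orchestrate(plan)
print(len(results), problems)
```

The review step matters as much as the parallel execution: the orchestrator compares what the subagents produced against the approved plan before the user is asked to approve the result.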

Please do not over-extrapolate this example; AI cannot make reports or semantic models for you. Rather, we use a complex example to demonstrate a variety of processes and considerations, and how an agent might contribute to the process at various points, where it is necessary and where it is helpful.

To reiterate, this example is deliberately sophisticated to showcase more completely what is possible; you can also use agents for simpler tasks. This scenario is more advanced than the previous ones. It takes a lot of time and effort to set up and use properly beyond simple searches and batch changes. This is not something that “just works”; it requires a good understanding of the nuances and the application of critical thinking to get value from it.

Scenario four: Asynchronous agents

This scenario involves a user delegating specific tasks to asynchronous agents for the agent to work on the task autonomously without supervision. The task could be something simple like reviewing a pull request (PR), or more sophisticated like making changes in the background. This scenario is distinct from scenario three, since the human does not orchestrate or supervise the agent’s activities directly with prompts, in a chatbot session. Rather, the human orchestrates it indirectly via instructions (and possibly an initial prompt) and then reviews the result (typically as a new PR that the agent opens).

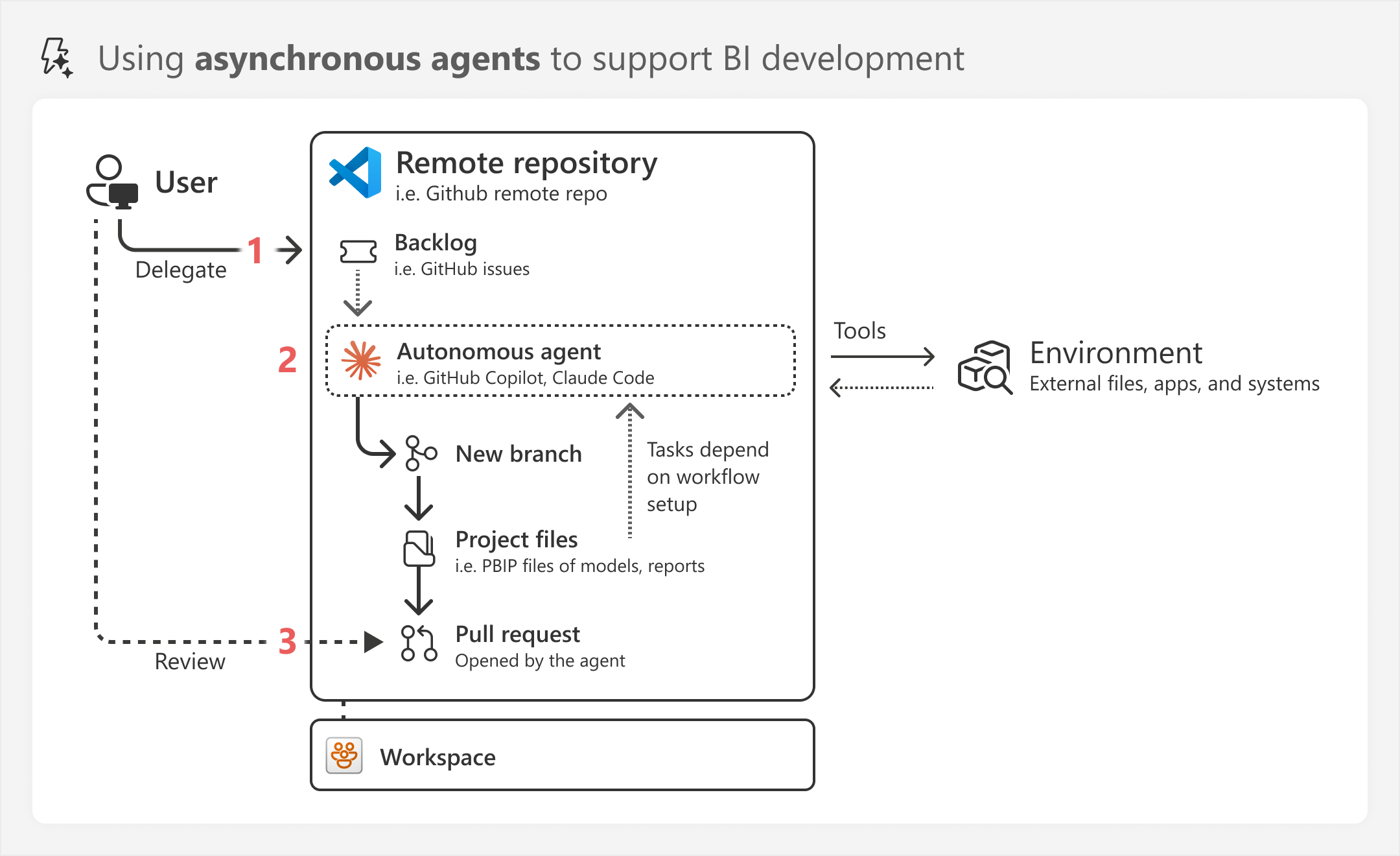

The diagram for this scenario is as follows.

The diagram depicts the following processes:

- The user can delegate a task to an agent via an issue backlog or a comment on an existing issue or PR.

- The agent can start working on the task asynchronously. It has access to tools to act in its environment, and its exact workflow is configured by the user. Typically, the agent will create a new branch, modify project files, and then notify the user via a new comment in the issue or PR.

- The user can then review the comment and the changes. If the agent made changes to project files, the user can review them in a new PR.

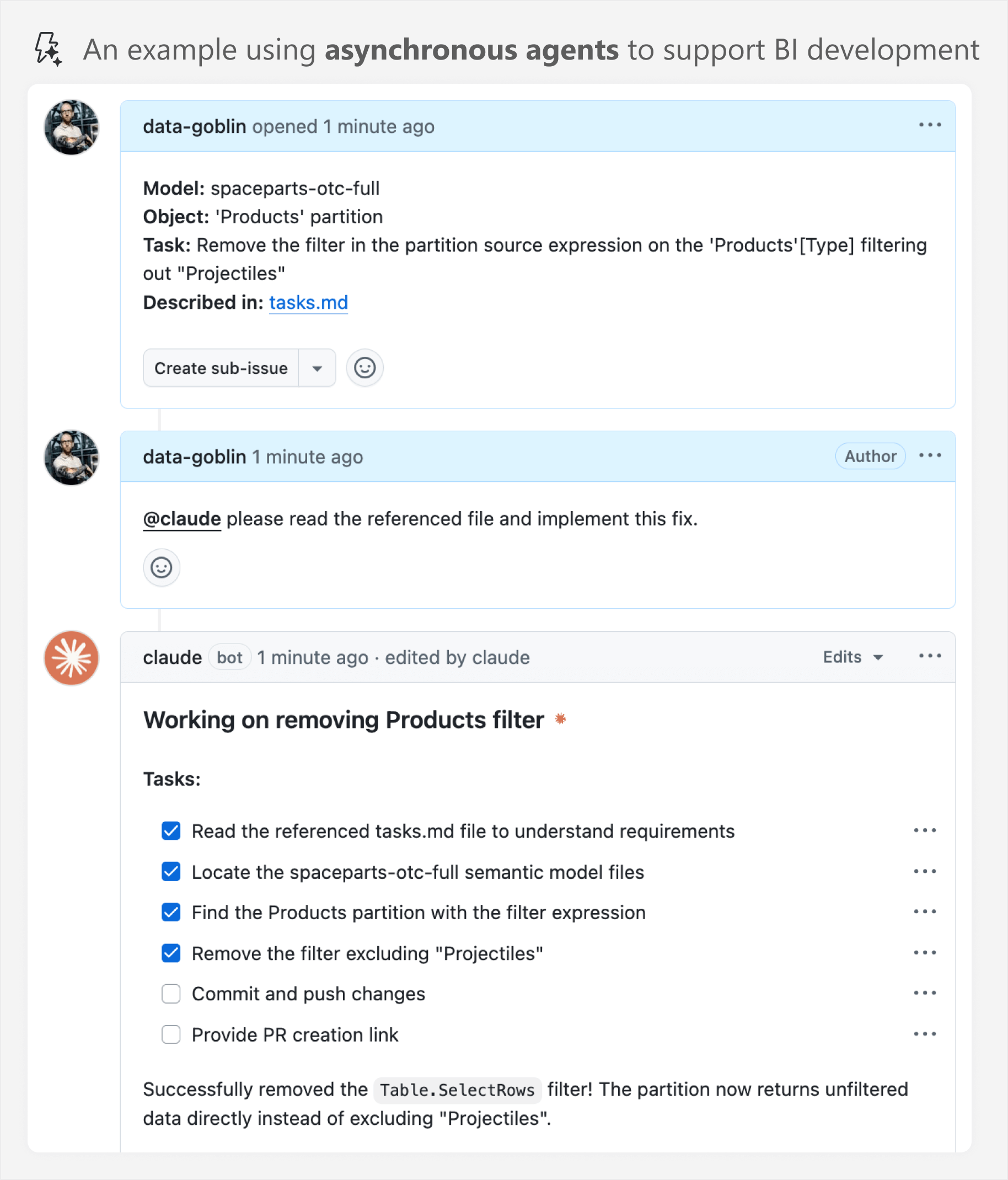

You can see an example of this below, where Claude Code works asynchronously on tasks described in a GitHub issue.

In the previous example, the user delegates a development task to Claude Code, which proceeds stepwise to work on it in the background, without human supervision. Currently, asynchronous agents have limited realistic applications beyond very finely-scoped tasks and reviews.

On security and cost

In this content and the associated whitepaper, we do not focus on particular models or tools. Rather, we focus on the high-level concepts, scenarios, and use-cases. As such, we provide no detailed information about cost or security considerations, at this time. The objective of this content is simply to provide a detailed overview of the possibilities and a realistic view of the thought, time, and effort required to apply them.

Conclusions

AI chatbots and agents provide some interesting opportunities to augment your BI workflows beyond simply copy-pasting code and generating background images for your reports. If you are willing to invest sufficient time and effort, you can set up agents that can help you with a wide range of tasks. However, doing so first requires a certain process maturity. If you are still figuring out the basics, then adding AI and agents on top is probably not going to help you; instead, it might just make your mess more complicated.

If you are interested, please check the whitepaper at SQLBI+ for more details, guidance, and examples, including relevant sample files and full demonstrations of each scenario.

Kurt Buhler
